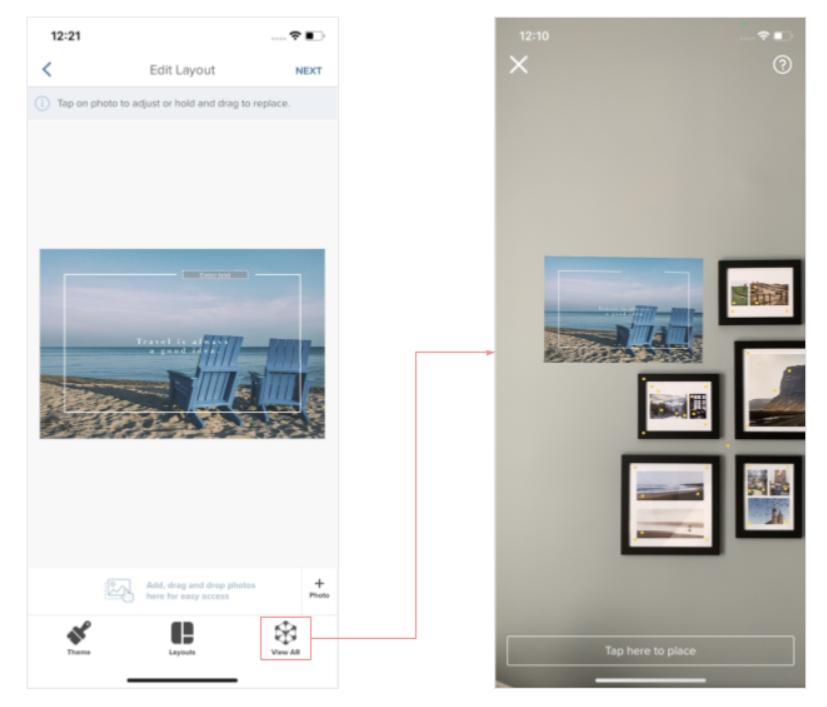

The Photobook iOS app includes an augmented reality (AR) feature that showcases our Canvas products. From the app's project editor, users can tap “View AR” to preview their Canvas project and get a glimpse of how it will look in the real world. They simply find a suitable wall and press “Tap here to place”, and the Canvas appears hanging on that wall in 3D at its accurate physical dimensions, including its depth.

To achieve this, we use Apple's ARKit framework, which requires iOS 11 or later.
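Placing a Canvas on a wall depends on ARKit detecting vertical planes. That option was added in iOS 11.3 (iOS 11.0 only detects horizontal planes), and world tracking itself only runs on supported hardware. The sketch below shows one way to build such a session configuration. The helper names `makeWallTrackingConfiguration()` and `startWallTracking(in:)` are hypothetical, and this is an illustration rather than the app's actual setup code.

```swift
import ARKit

/// Hypothetical helper: returns a world-tracking configuration that looks for
/// walls, or nil when the device or OS version cannot support it.
func makeWallTrackingConfiguration() -> ARWorldTrackingConfiguration? {
    // World tracking is not available on every device.
    guard ARWorldTrackingConfiguration.isSupported else { return nil }
    // Vertical plane detection (walls) requires iOS 11.3 or later.
    guard #available(iOS 11.3, *) else { return nil }

    let configuration = ARWorldTrackingConfiguration()
    configuration.planeDetection = [.vertical]
    return configuration
}

/// Hypothetical helper: starts the AR session on the given view.
/// Returns false when wall detection is unavailable.
func startWallTracking(in sceneView: ARSCNView) -> Bool {
    guard let configuration = makeWallTrackingConfiguration() else { return false }
    sceneView.session.run(configuration)
    return true
}
```

Returning an optional lets the caller hide or disable the “View AR” entry point on devices where wall detection cannot work, instead of starting a session that will never find a wall.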

The Measurements

ARKit measures distances in meters. Our project editor is backed by the Printbox engine, whose product workspace data is expressed in millimeters, so the app converts the Printbox values to meters for ARKit. The measurements include:

- Canvas width

- Canvas height

- Canvas depth

| Measurement | Printbox workspace data | Translated data for AR |
| --- | --- | --- |
| Canvas width | 457 mm | 0.457 m |
| Canvas height | 305 mm | 0.305 m |
| Canvas depth | 35 mm (bleed) | 0.035 m |
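Since one SceneKit/ARKit unit is one meter, the conversion in the table is a division by 1,000. A minimal sketch, assuming a hypothetical `CanvasSizeMillimeters` type standing in for whatever the app reads from the Printbox workspace data:

```swift
/// Hypothetical model of the canvas size from the Printbox workspace data,
/// in millimeters.
struct CanvasSizeMillimeters {
    let width: Double
    let height: Double
    let depth: Double

    /// ARKit and SceneKit use meters, so each value is divided by 1,000.
    var inMeters: (width: Double, height: Double, depth: Double) {
        return (width / 1000, height / 1000, depth / 1000)
    }
}

let canvas = CanvasSizeMillimeters(width: 457, height: 305, depth: 35)
let meters = canvas.inMeters
print(meters.width, meters.height, meters.depth) // prints "0.457 0.305 0.035"
```

Keeping the millimeter values as the source of truth and converting only at the AR boundary avoids accumulating rounding differences between the editor and the 3D preview.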

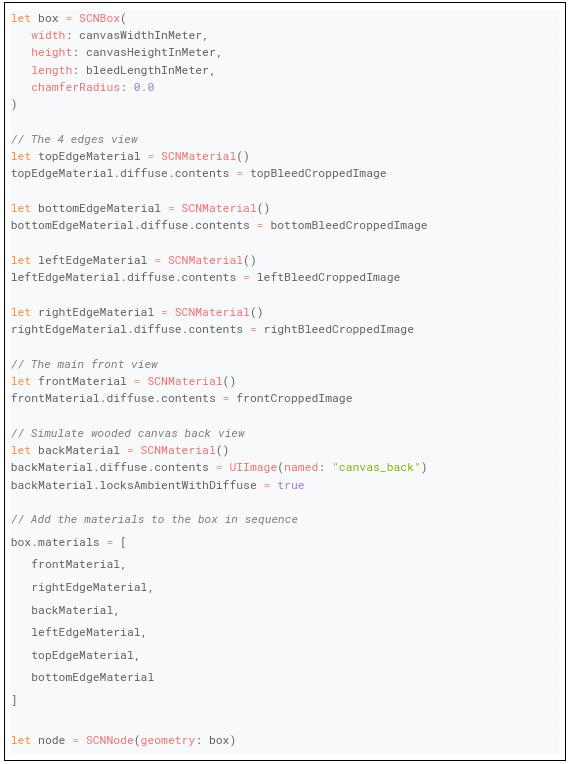

Creating the Canvas Object in 3D

Essentially, a canvas is just a rectangular box in 3D. The box is built with SceneKit, using SCNBox, one of SceneKit's built-in SCNGeometry types. It breaks down into:

- SCNBox - Defines the shape of the box along the x-, y-, and z-axes

- SCNMaterial - Defines the surface material on each side of the 3D object

- SCNNode - Defines the position and orientation of the 3D object in the scene
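The three building blocks above can be combined roughly as follows. This is a sketch, not the app's code: `makeCanvasNode` is a hypothetical helper, and giving the edges a plain white material is an assumption made for brevity (a real canvas would show the wrapped print on its edges). SCNBox applies up to six materials in the fixed order front, right, back, left, top, bottom.

```swift
import SceneKit
import UIKit

/// Hypothetical helper: builds the canvas as a box node.
/// All sizes are in meters (SceneKit units).
func makeCanvasNode(width: CGFloat, height: CGFloat, depth: CGFloat,
                    printImage: UIImage) -> SCNNode {
    // SCNBox: width along x, height along y, length (the canvas depth) along z.
    let box = SCNBox(width: width, height: height, length: depth, chamferRadius: 0)

    // The printed image goes on the front face.
    let front = SCNMaterial()
    front.diffuse.contents = printImage
    // Assumption: plain materials for the edges and back.
    let edge = SCNMaterial()
    edge.diffuse.contents = UIColor.white
    let back = SCNMaterial()
    back.diffuse.contents = UIColor.darkGray

    // Material order for SCNBox faces: front, right, back, left, top, bottom.
    box.materials = [front, edge, back, edge, edge, edge]

    // The node holds the geometry plus its position and orientation; it starts
    // at the origin and is moved once a wall has been found.
    let node = SCNNode(geometry: box)
    node.position = SCNVector3(0, 0, 0)
    return node
}
```

For the 457 × 305 mm example, the call would be `makeCanvasNode(width: 0.457, height: 0.305, depth: 0.035, printImage: image)`.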

Blending into Augmented Reality

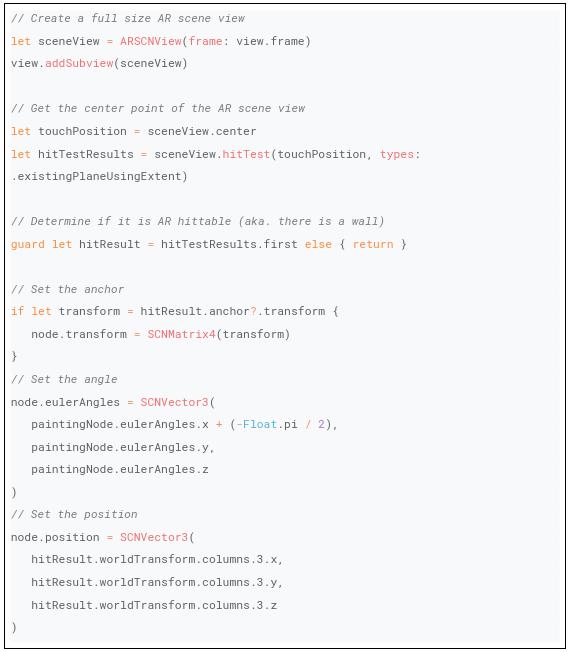

The created SCNNode object is then added to an ARSCNView, which blends the 3D object from SceneKit into augmented reality. The coordinates at which the 3D object is rendered are determined by the center point of the camera view. The pointer at that point must be in an “AR hittable” state, which means ARSCNView has determined that there is a wall there. Below is the sample code for blending the node into the augmented reality view: